Big Data Advances Ability to Automatically Segment Breast Tumors

Potential future uses include assistance in diagnosis and prediction

Breast tumors can be quite variable in size and appearance, so precisely outlining their extent and margins on an MRI image can be helpful in both treatment planning and monitoring treatment response or tumor progression. However, this outlining—or segmenting of images—is not an easy task, particularly for an entire volume covering many image slices.

Automatic segmentation of breast tissue in MRI is a two-step process: first the breast area has to be separated from the chest wall, and then the breast tissue has to be segmented into fibroglandular, fatty and tumor tissue.

“Because manual segmentation is extremely labor intensive, its use for routine clinical evaluation has been limited—despite the mounting evidence of its clinical utility,” says Lucas Parra, PhD, professor of biomedical engineering at the City College of New York. “Automatic segmentation using modern deep network techniques has the potential to meet this important clinical need,” Dr. Parra added.

Creating Deep Networks Requires Lots of Data

The challenge with automation is that the available MRI datasets used to train automatic segmentation algorithms have remained comparatively small, typically consisting of just 50 to 250 MRI examinations. Not only does this significantly limit the potential of the deep networks, it also makes comparison with radiologist performance difficult. In fact, in one comparative study, radiologists outperformed the networks.

“Before deep learning networks can do what you want them to do, you need to teach them, and that requires lots and lots of data,” Dr. Parra said.

To get that data, Dr. Parra teamed up with Elizabeth Sutton, MD, associate attending breast radiologist at Memorial Sloan Kettering Cancer Center (MSK).

“As a leading center for cancer treatment, MSK has access to a substantial amount of data,” Dr. Parra said. “Leveraging this unprecedentedly large dataset, we developed a deep convolutional neural network capable of providing fully automated, radiologist-level MRI segmentation of breast cancer.”

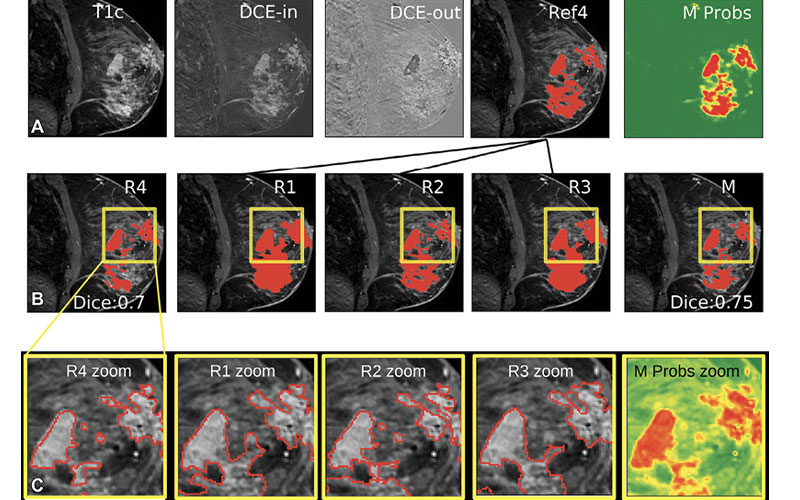

Drs. Parra and Sutton’s study, which was recently published in Radiology: Artificial Intelligence, used 38,229 exams performed in female patients at MSK between 2002 and 2014. After testing several networks, the researchers found the highest-performing to be a type of 3D U-Net, an architecture based on the convolutional neural network (CNN), trained on routine clinical dynamic contrast-enhanced MRI.

“When trained on a sufficiently large dataset, a 3D U-Net is able to segment breast cancers with a performance comparable to that of fellowship-trained radiologists,” Dr. Sutton said.
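The article does not say how agreement between the network and the radiologists was scored. As a purely illustrative sketch, one overlap measure commonly used for segmentation is the Dice coefficient, which is twice the number of voxels two masks share divided by the total number of voxels marked in both. The function name and the toy masks below are hypothetical and are not taken from the study or its code.

```python
from typing import Sequence


def dice_score(predicted: Sequence[int], reference: Sequence[int]) -> float:
    """Dice overlap between two binary masks given as flat 0/1 sequences."""
    if len(predicted) != len(reference):
        raise ValueError("masks must have the same number of voxels")
    # Voxels marked as tumor in both masks.
    overlap = sum(1 for p, r in zip(predicted, reference) if p and r)
    # Total voxels marked as tumor across the two masks.
    total = sum(1 for p in predicted if p) + sum(1 for r in reference if r)
    # Two empty masks are treated as perfect agreement (a convention, assumed here).
    return 1.0 if total == 0 else 2.0 * overlap / total


# Hypothetical toy masks: 1 marks a tumor voxel, 0 marks background.
network_mask = [0, 1, 1, 1, 0, 0, 0, 0]
radiologist_mask = [0, 0, 1, 1, 1, 0, 0, 0]
score = dice_score(network_mask, radiologist_mask)
print(f"Dice overlap: {score:.2f}")
```

A score of 1 means the two outlines coincide exactly and 0 means they do not overlap at all. A real 3D volume would be flattened to a single sequence of voxels before being passed to a function like this.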

Drs. Sutton and Parra and their team have made all the relevant code and the pretrained network openly available on GitHub.

“A fundamental principle of the scientific method is that anyone should be able to arrive at the same result when performing the same experiment. Truth and progress depend on it,” Dr. Parra said. “If AI is to be considered a science, then one has to be able to reproduce published work. The complexity of deep networks poses a serious challenge to reproducibility and progress. To address this challenge it is imperative to share code. Fortunately, this is really easy to do nowadays.”

Toward Diagnosis and Prediction

Now that they have developed a network capable of automatically segmenting breast tumors, Drs. Parra and Sutton are working to apply it towards diagnosis and prediction.

“Radiologists can look at the image and see a tumor,” Dr. Parra said. “What they can’t do is look at an image and predict what might happen two, three or five years down the road.”

According to Dr. Parra, this is where machine learning can bring real added value to patient care. The problem is that current methods of quantifying risk are not very specific, because they rely on factors such as genetic markers and family history.

“Our current research is based on the hypothesis that utilizing MRI segmentation will allow us to make a better estimation of breast cancer risk. Thus, some women may be advised to come back for a three- or six-month screening, whereas others may not need to come back for another two years,” Dr. Parra said. “In some cases, we may recommend immediate biopsy when the risk is particularly high. This sort of personalized treatment is referred to as ‘risk-adjusted screening’.”

Dr. Sutton, who developed the idea for this process, pointed out that there is a flip side to this machine recommendation.

“We really do not want to miss a tumor because the machine recommended a longer follow-up period,” she said. “Ultimately, there has to be high certainty in this risk prediction.”

For More Information

Access the Radiology: Artificial Intelligence study, “Radiologist-Level Performance by Using Deep Learning for Segmentation of Breast Cancers on MRI Scans.”

Access the code and pretrained network at https://github.com/lkshrsch/Segmentation_breast_cancer_MRI/.

RSNA Organizes AI Challenges to Spur Creation of AI Tools

RSNA organizes AI challenges to enhance the efficiency and accuracy of radiologic diagnoses. These AI data challenges involve collecting substantial volumes of imaging data, annotated by expert radiologists. RSNA collaborates with multiple organizations from around the world to organize these challenges.

RSNA is planning two AI challenges in 2022. The first focuses on detection of fractures in cervical spine CT images and is being conducted in collaboration with the American Society of Spine Radiology. The second will focus on detection and classification of suspected breast lesions in mammography images.

In addition, RSNA is currently working with other organizations to build a repository of COVID-19 imaging data for research. The Medical Imaging and Data Resource Center (MIDRC) is an open-access platform for COVID-19-related medical images and associated data.

Learn more at RSNA.org/AI-Challenge.